Its Day 99 of my 100 Days of Cloud journey and in todays post we’ll take a quick look at some of the announcements coming out of Microsoft Build.

Microsoft Build is an annual event that is primarily focused on the development side of the Microsoft ecosystem, however like all Microsoft events there are normally some really cool announcements around new technologies and updates to existing technologies.

I’m going to focus particularly on updates to the technologies that I’ve blogged about over the last 99 days! In effect, I’m providing some updates to the blog posts so that if you’ve followed me on the journey this far, you’ll get to here and have the latest news and features!

Azure Container Apps

Azure Container Apps is now Generally Available. This enables you to run microservices and containerized apps on a serverless platform.

Common uses of Azure Container Apps include:

- Deploying API endpoints

- Hosting background processing applications

- Handling event-driven processing

- Running microservices

Applications built on Azure Container Apps can dynamically scale based on the following characteristics:

- HTTP traffic

- Event-driven processing

- CPU or memory load

We looked at Azure Container instances on Day 82. The key differences between the 2 are:

- If you need to spin up multiple container (e.g. front end / backend / database), Azure Container Apps is a better choice as it comes with Dapr (Distributed Application Runtime) and it will auto retry the requests and add some telemetry data.

- If you just need long running jobs or you don’t need multiple containers to communicate with each other, you can go with Azure Container Instances.

You can check out the blog post announcement here, and the offical Microsoft Docs page here for more information.

Azure Cosmos DB

We looked at Azure Cosmos DB back on Day 64 and learned that it is a fully managed NoSQL database provides high availability, globally-distributed access to data with very low latency. There are a number of APIs to choose from that best meets the needs of your database requirements.

Some of the new featres announced for CosmosDB are:

- Increased serverless capacity to 1 TB.

- Shared throughput across database partitions.

- Support for hierarchical partition keys.

- An improved 30-day free trial experience, now generally available, and support for MongoDB data in the Azure Cosmos DB Linux desktop emulator.

- A new, free, continuous backup and point-in-time restore capability enables seven-day data recovery and restoration from accidental deletes

- Role-based access control support for Azure Cosmos DB API for MongoDB offers enhanced security.

You can find out more about the Cosmos DB enhancements here.

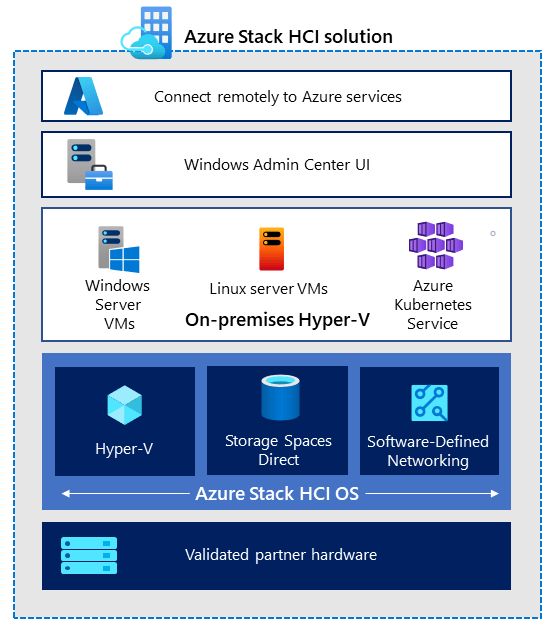

Azure Stack HCI

Its timely that we only looked at Azure Stack HCI on Day 95 and commented that your Azure Stack HCI Cluster can contain between 2 and 16 physical servers.

The new single node Azure Stack HCI, now generally available, fulfills the growing needs of customers in remote locations while maintaining the innovation of native integration with Azure Arc. It offers customers the flexibility to deploy the stack in smaller spaces and with less processing needs, optimizing resources while still delivering quality and consistency.

Additional benefits include:

- Smaller Azure Stack HCI solutions for environments with physical space constraints or that do not require built-in resiliency, like retail stores and branch offices.

- A smaller footprint to reduce hardware and operational costs.

- The same scale applies, so you can start at 1 and scale up to 16 nodes if required.

You can find out more about the AZure Stack HCI announcement here.

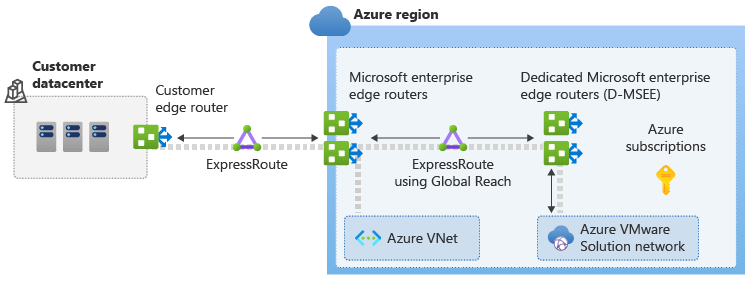

Azure Migrate

On Day 18 we looked at Azure Migrate, which is an Azure technology which automates planning and migration of your on-premise servers from Hyper-V, VMware or Physical Server environments.

Enhancements to the service now streamline and simlify cloud migration and modernization:

- Agentless discovery and grouping of dependent Hyper-V virtual machines (VMs) and physical servers to ensure all required components are identified and included during a move to Azure. This feature is generally available.

- Azure SQL assessment improvements for better customer experience. Assessments now include recommendations for SQL Server on Azure VMs and support for Hyper-V VMs and physical stacks, along with already existing assessments for Azure SQL Managed Instance and Azure SQL Database. This feature is in preview.

- Pause and resume of migration function has been included to provide control over the migration window. This mechanism can be used to schedule migrations during off-peak periods. This feature is in preview.

- Discovery, assessment and modernization of ASP.NET web apps to native Azure Application Service. Customers can discover and modernize an ASP.NET web app to Azure Kubernetes Service (AKS) Application Service Container and discover Java apps running on Apache Tomcat.

Conclusion

So thats a quick rundown of the main updates from Microsoft Build. You can find information on all of the updates that were released here in the Microsoft Build Book of News, and its also not too late to register and watch some of the recorded and on-demand sessions from Microsoft Build by signing up here.

As with all Microsoft Conferences, there’s a CloudSkills Challenge and you have until June 21st to sign up and complete the modules from one of the 8 challenges are available. As always, you can earn a free certification exam pass if you complete the challenge! You can sign up here and the list of rules and exams eligible is here!

Hope you enjoyed this post, until next time!