It’s Day 67 of my 100 Days of Cloud journey, and today I’m looking at Azure Event Hubs.

In the last post, we looked at Azure Event Grid, a serverless offering that lets you easily build applications with event-based architectures. Azure Event Grid supports a number of event sources, and one of those is Azure Event Hubs.

Whereas Azure Event Grid can take in events from sources and trigger actions based on those events, Azure Event Hubs is a big data streaming platform and event ingestion service. It can receive and process millions of events per second. Data sent to an event hub can be transformed and stored by using any real-time analytics provider or batching/storage adapters.

One of the key differences between the two services is that while Event Grid plugs directly into Azure services and listens for events coming from them, Event Hubs can also ingest events from sources outside of Azure, and can handle millions of events coming from multiple devices.

Here are some of the scenarios where you can use Event Hubs:

- Anomaly detection (fraud/outliers)

- Application logging

- Analytics pipelines, such as clickstreams

- Live dashboards

- Archiving data

- Transaction processing

- User telemetry processing

- Device telemetry streaming

Concepts

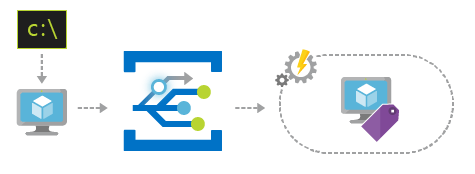

Azure Event Hubs represents the “front door” (or event ingestor, to give it its correct name) for an event pipeline, and sits between event producers and event consumers. It decouples the process of producing data from the process of consuming data. You can publish events individually or in batches.
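To make the batching idea a bit more concrete, here is a minimal sketch in plain Python of packing events into size-limited batches before they are sent. It does not use the Azure SDK: `make_batches` and the 1 KB limit are hypothetical, chosen purely for illustration, and are not Event Hubs settings.

```python
import json
from typing import Iterable, Iterator, List


def make_batches(events: Iterable[dict], max_bytes: int = 1024) -> Iterator[List[bytes]]:
    """Group JSON-encoded events into batches whose payload does not exceed max_bytes.

    Hypothetical helper for illustration only; not part of any Azure SDK.
    """
    batch: List[bytes] = []
    size = 0
    for event in events:
        payload = json.dumps(event).encode("utf-8")
        if len(payload) > max_bytes:
            raise ValueError("single event exceeds the batch size limit")
        # Start a new batch when adding this event would overflow the current one.
        if batch and size + len(payload) > max_bytes:
            yield batch
            batch, size = [], 0
        batch.append(payload)
        size += len(payload)
    # Emit whatever is left over as the final, possibly smaller, batch.
    if batch:
        yield batch


readings = [{"device": f"sensor-{i % 3}", "temp": 20 + i} for i in range(100)]
batches = list(make_batches(readings, max_bytes=1024))
print(f"{len(readings)} events packed into {len(batches)} batches")
```

Sending one batch instead of many single events cuts down on round trips, which is the main reason a producer would batch at all.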

Azure Event Hubs is built around the concepts of partitions and consumer groups. Inside an event hub, events are sent to partitions by specifying a partition key or a partition ID. On the Basic and Standard tiers, the partition count of an event hub cannot be changed after creation, so be mindful of this limitation.
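As a rough illustration of how a partition key decides where an event lands, here is a toy model in plain Python. It is a sketch under assumptions: `partition_for` is a hypothetical helper, and SHA-256 is only a stand-in for whatever hash the service uses internally.

```python
import hashlib


def partition_for(partition_key: str, partition_count: int) -> int:
    """Map a partition key to a partition index.

    Toy stand-in: SHA-256 gives a stable hash here; the real service's
    hashing scheme is not modelled.
    """
    digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


PARTITION_COUNT = 4  # treated as fixed for the lifetime of this toy hub
partitions = [[] for _ in range(PARTITION_COUNT)]

# Twelve events from three devices; the device name is the partition key.
for i in range(12):
    key = f"device-{i % 3}"
    partitions[partition_for(key, PARTITION_COUNT)].append((key, i))

for index, events in enumerate(partitions):
    print(index, events)
```

Every event with the same key ends up in the same partition, in the order it was sent. In this toy model, changing `PARTITION_COUNT` would remap keys to different partitions, which hints at why the count is worth choosing carefully up front.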

Receivers are grouped into consumer groups. A consumer group represents a view (state, position, or offset) of an entire event hub. It can be thought of as a set of parallel applications that consume events at the same time.

Consumer groups enable receivers to each have a separate view of the event stream. They read the stream independently at their own pace and with their own offsets. Event Hubs uses a partitioned consumer pattern; events are spread across partitions to allow horizontal scale. Events can also be captured to either Azure Blob Storage or Azure Data Lake Storage; this can be configured when the event hub is created.
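The "separate view" idea can be sketched with a small in-memory model. This is not the Azure SDK: `ToyEventHub` and the group names are hypothetical, and the toy keeps each group's offsets inside the hub object purely for brevity, whereas real consumers are responsible for tracking their own position.

```python
from collections import defaultdict


class ToyEventHub:
    """In-memory model: partitions are append-only lists, and each
    consumer group has its own read offset per partition."""

    def __init__(self, partition_count: int) -> None:
        self.partitions = [[] for _ in range(partition_count)]
        # offsets[group][partition] -> index of the next event to read
        self.offsets = defaultdict(lambda: [0] * partition_count)

    def send(self, partition_id: int, event: str) -> None:
        self.partitions[partition_id].append(event)

    def receive(self, group: str, partition_id: int, max_events: int = 10) -> list:
        start = self.offsets[group][partition_id]
        events = self.partitions[partition_id][start:start + max_events]
        # Only this group's offset advances; other groups are unaffected.
        self.offsets[group][partition_id] = start + len(events)
        return events


hub = ToyEventHub(partition_count=2)
for i in range(5):
    hub.send(i % 2, f"event-{i}")  # partition 0 gets events 0, 2 and 4

dashboard_first = hub.receive("dashboard", 0, max_events=2)
archiver_all = hub.receive("archiver", 0)
dashboard_rest = hub.receive("dashboard", 0)
print(dashboard_first, dashboard_rest, archiver_all)
```

The "dashboard" group reads partition 0 in two steps while the "archiver" group reads the same events in one go. Neither affects the other, and reading does not remove events from the partition.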

Use Cases

Event Hubs is the component to use for real-time and/or streaming data use cases:

- Real-time reporting

- Capture streaming data into files for further processing and analysis – e.g. capturing data from micro-service applications or a mobile app

- Make data available to stream-processing and analytics services – e.g. when scoring an AI algorithm

- Telemetry streaming & processing

- Application logging

Event Hubs is also available as a feature for Azure Stack Hub, which allows you to realize hybrid cloud scenarios. Streaming and event-based solutions are supported, for both on-premises and Azure cloud processing.

Conclusion

You can learn more about Event Hubs in the Microsoft documentation. Hope you enjoyed this post. Until next time!