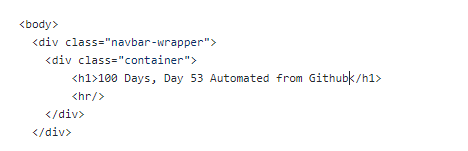

It’s Day 57 of my 100 Days of Cloud journey, and today I’m taking a look at Azure Conditional Access.

In the last post, we looked at the state of MFA adoption across Microsoft tenancies, and the different features that are available with each type of Azure Active Directory licence. We also saw that if your licences do not include Azure AD Premium P1 or P2, it’s recommended that you upgrade to one of these tiers to include Conditional Access as part of your MFA deployment.

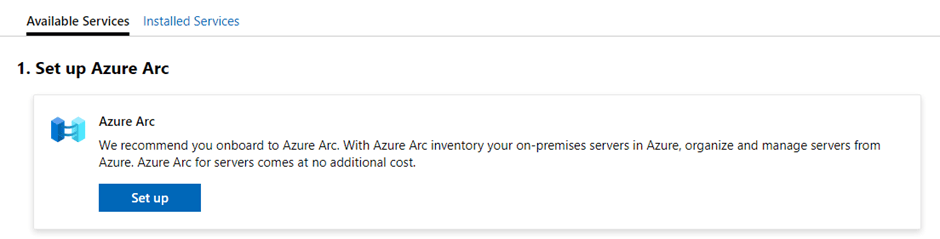

Let’s take a deeper look at what Conditional Access is, and why it’s an important component in securing access to your Azure, Office 365 or hybrid environments.

Overview

Historically, IT environments were located on-premises, and companies with multiple sites connected them using site-to-site VPNs. In that case, you needed to be inside one of your offices to access any applications or files, and a firewall protected your perimeter against attacks. In very rare cases, a VPN client was provided to users who needed remote access, and it had to be connected in order to access resources.

That was then. These days, the security perimeter extends beyond the organization’s network to include user and device identity.

Conditional Access uses signals to make decisions and enforce organisational policies. The simplest way to describe these policies is as “if-then” statements:

- If a user wants to access a resource,

- Then they must complete an action.

It’s important to note that Conditional Access policies shouldn’t be used as a first line of defense: they are only enforced after the first level of authentication has completed.
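To make the “if-then” idea concrete, here is a minimal Python sketch. It is purely illustrative and not a Microsoft API: the `Policy` class, the `required_action` function and the payroll example are all names I’ve made up for this post. It also shows the ordering described above, where a policy is only consulted once first-factor authentication has succeeded.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Policy:
    """Hypothetical stand-in for a Conditional Access policy (not a Microsoft API)."""
    name: str
    condition: Callable[[dict], bool]  # the "if" part: does this request match?
    action: str                        # the "then" part: what the user must do


def required_action(policy: Policy, request: dict, first_factor_ok: bool) -> Optional[str]:
    """Return the action the user must complete, or None if the policy does not apply."""
    if not first_factor_ok:
        # The policy is never evaluated if first-factor authentication failed.
        return "deny: first-factor authentication failed"
    if policy.condition(request):
        return policy.action
    return None


# Made-up example: IF a user wants to access the payroll app, THEN they must complete MFA.
payroll_mfa = Policy(
    name="Require MFA for the payroll app",
    condition=lambda req: req["app"] == "payroll",
    action="complete multi-factor authentication",
)

print(required_action(payroll_mfa, {"user": "alice", "app": "payroll"}, first_factor_ok=True))
print(required_action(payroll_mfa, {"user": "alice", "app": "wiki"}, first_factor_ok=True))
```

The first call returns the MFA action because the condition matches; the second returns `None` because the policy doesn’t apply to that app.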

How it works

Conditional Access uses signals that are taken into account when making a policy decision. The most common signals are:

- User or group membership:

  - Policies can be targeted to specific users and groups giving administrators fine-grained control over access.

- IP Location information:

  - Organizations can create trusted IP address ranges that can be used when making policy decisions.

  - Administrators can specify entire countries/regions IP ranges to block or allow traffic from.

- Device:

  - Users with devices of specific platforms or marked with a specific state can be used when enforcing Conditional Access policies.

  - Use filters for devices to target policies to specific devices like privileged access workstations.

- Application:

  - Users attempting to access specific applications can trigger different Conditional Access policies.

- Real-time and calculated risk detection:

  - Signals integration with Azure AD Identity Protection allows Conditional Access policies to identify risky sign-in behavior. Policies can then force users to change their password, do multi-factor authentication to reduce their risk level, or block access until an administrator takes manual action.

- Microsoft Defender for Cloud Apps:

  - Enables user application access and sessions to be monitored and controlled in real time, increasing visibility and control over access to and activities done within your cloud environment.

These signals are then evaluated to reach one of the following decisions:

- Block access

  - Most restrictive decision

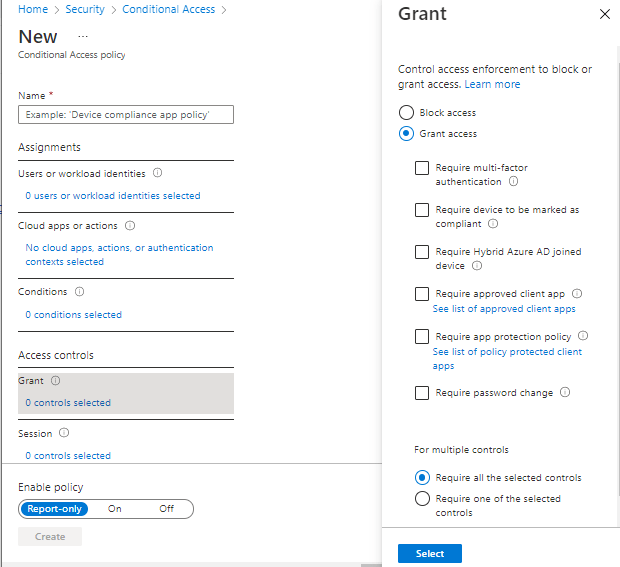

- Grant access

  - Least restrictive decision, can still require one or more of the following options:

    - Require multi-factor authentication

    - Require device to be marked as compliant

    - Require Hybrid Azure AD joined device

    - Require approved client app

    - Require app protection policy (preview)


Combining these signals and decisions, the most commonly applied policies are:

- Requiring multi-factor authentication for users with administrative roles

- Requiring multi-factor authentication for Azure management tasks

- Blocking sign-ins for users attempting to use legacy authentication protocols

- Requiring trusted locations for Azure AD Multi-Factor Authentication registration

- Blocking or granting access from specific locations

- Blocking risky sign-in behaviors

- Requiring organization-managed devices for specific applications
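A few of the policies above can be modelled in a short Python sketch to show how signals feed into a block or grant decision. Everything here is hypothetical: the `SignIn` fields, the `Policy` class, the “HR portal” app and the control names are my own illustration, not how Azure AD is implemented. The sketch assumes that a matching block policy always wins, and that otherwise the grant controls of every matching policy must all be satisfied, which is my understanding of how multiple policies combine.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Set, Tuple


@dataclass
class SignIn:
    """Signals for one sign-in attempt (field names are illustrative only)."""
    user: str
    roles: Set[str] = field(default_factory=set)
    app: str = "Office 365"
    legacy_auth: bool = False


@dataclass
class Policy:
    """Hypothetical policy: a condition over the signals plus a decision."""
    name: str
    applies: Callable[[SignIn], bool]
    block: bool = False
    grant_controls: Set[str] = field(default_factory=set)


POLICIES = [
    Policy("Require MFA for administrators",
           applies=lambda s: "Global Administrator" in s.roles,
           grant_controls={"mfa"}),
    Policy("Block legacy authentication",
           applies=lambda s: s.legacy_auth,
           block=True),
    Policy("Require managed device for the HR portal",
           applies=lambda s: s.app == "HR portal",
           grant_controls={"compliant_device"}),
]


def evaluate(sign_in: SignIn, policies: List[Policy]) -> Tuple[str, Set[str]]:
    """Return ("block", set()) or ("grant", controls the user must satisfy)."""
    matching = [p for p in policies if p.applies(sign_in)]
    if any(p.block for p in matching):
        return "block", set()  # assumption: block is the most restrictive and wins
    required: Set[str] = set()
    for p in matching:
        required |= p.grant_controls  # assumption: all matching policies must be met
    return "grant", required


admin = SignIn(user="admin@contoso.example", roles={"Global Administrator"}, app="HR portal")
legacy = SignIn(user="bob@contoso.example", legacy_auth=True)

for attempt in (admin, legacy):
    decision, controls = evaluate(attempt, POLICIES)
    print(attempt.user, decision, sorted(controls))
```

The administrator is granted access but must satisfy both MFA and the compliant device requirement, while the legacy authentication attempt is blocked outright.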

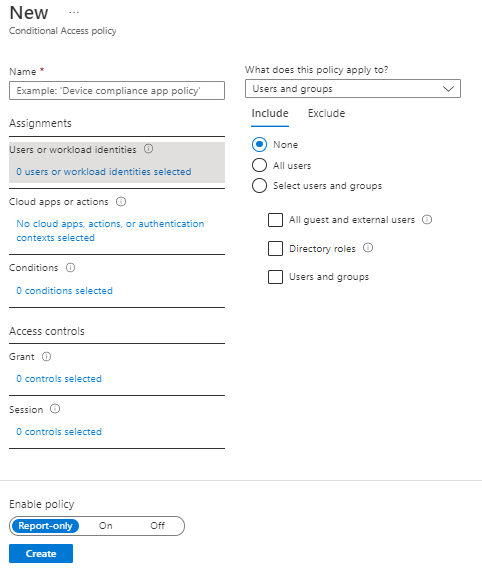

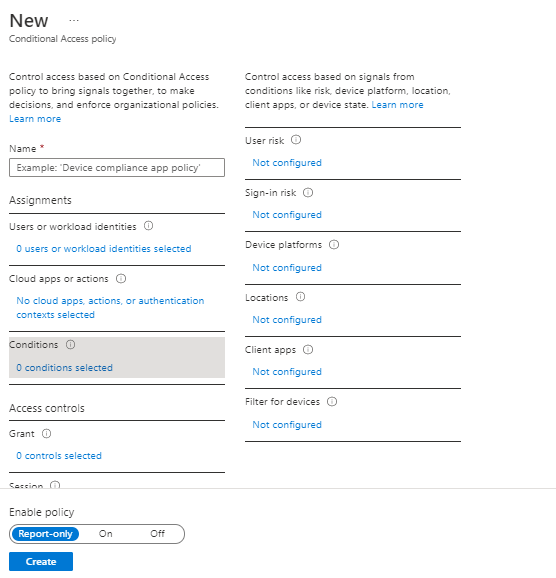

If we look at the Conditional Access blade under Security in Azure and select “Create New Policy”, we see the options available for creating a policy. The first three options are under Assignments:

- Users or workload identities – this defines users or groups that can have the policy applied, or who can be excluded from the policy.

- Cloud Apps or Actions – here, you select the apps that the policy applies to. Be careful with this option! Selecting “All cloud apps” also affects the Azure Portal and could lock you out.

- Conditions – here we assign the conditions under which the policy applies, such as locations and device platforms (e.g. operating systems).

The last two options are under Access Control:

- Grant – controls the enforcement to block or grant access

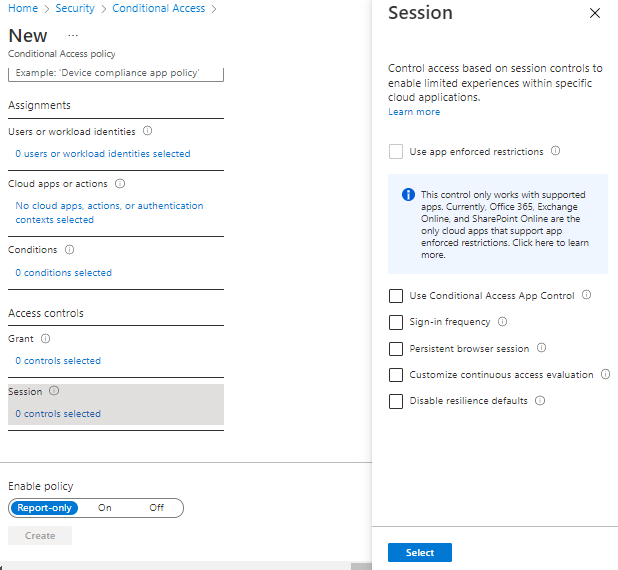

- Session – this controls access such as time-limited access and browser session controls.

We can also see from the above screens that we can set the policy to “Report-only” mode – this is useful when you want to see how a policy affects your users or devices before it is fully enabled.
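The same options can also be expressed as JSON for the Microsoft Graph API instead of clicking through the portal. The Python sketch below only builds and prints a request body; it doesn’t call Graph. The endpoint and field names (`state`, `conditions`, `grantControls`, `sessionControls`) follow my understanding of the Graph `conditionalAccessPolicy` resource, so treat them as assumptions and check them against the current documentation. The `build_policy` helper, the app ID and the excluded account ID are placeholders I’ve made up.

```python
import json

# Assumed Microsoft Graph endpoint for creating a policy; verify before use.
GRAPH_URL = "https://graph.microsoft.com/v1.0/identity/conditionalAccess/policies"


def build_policy(display_name, include_users, exclude_users, include_apps, report_only=True):
    """Build a request body mirroring the portal's Assignments and Access Control sections."""
    return {
        "displayName": display_name,
        # Report-only mode lets you see the policy's impact before enforcing it.
        "state": "enabledForReportingButNotEnforced" if report_only else "enabled",
        "conditions": {
            # Users or workload identities: who is included and excluded.
            "users": {"includeUsers": include_users, "excludeUsers": exclude_users},
            # Cloud apps or actions: a specific app rather than "All", to avoid lockouts.
            "applications": {"includeApplications": include_apps},
            # Conditions: here, only the device platform is constrained.
            "platforms": {"includePlatforms": ["android", "iOS"]},
        },
        # Grant: allow access, but require MFA.
        "grantControls": {"operator": "OR", "builtInControls": ["mfa"]},
        # Session: time-limited access via sign-in frequency.
        "sessionControls": {
            "signInFrequency": {"isEnabled": True, "type": "hours", "value": 4}
        },
    }


payload = build_policy(
    display_name="Require MFA on mobile devices (report-only)",
    include_users=["All"],
    exclude_users=["<object-id-of-emergency-access-account>"],  # placeholder
    include_apps=["<app-id-of-target-application>"],            # placeholder
)

print("POST", GRAPH_URL)
print(json.dumps(payload, indent=2))
```

Starting with `report_only=True` matches the advice above: you can review the effect in the sign-in logs first, then change the state to enabled once you’re happy with the result.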

Conclusion

You can find more details on Conditional Access in the official Microsoft documentation here. I hope you enjoyed this post. Until next time!