In today’s post on my 100 days of Cloud journey, I’m going to talk about Azure Active Directory and RBAC Roles.

Anyone who has followed me so far on the journey is probably asking why I haven’t deployed or built anything yet. Isn’t that what the whole “100 Days” challenge is all about? I’m getting there, and I will be deploying something in the coming days! But first, it’s important to prepare our environment and understand the different layers and base-level requirements before we build anything.

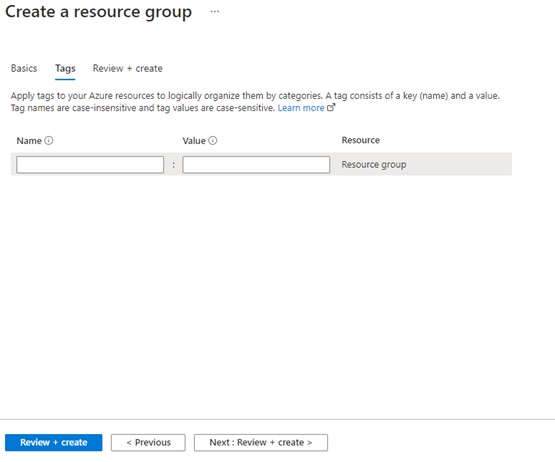

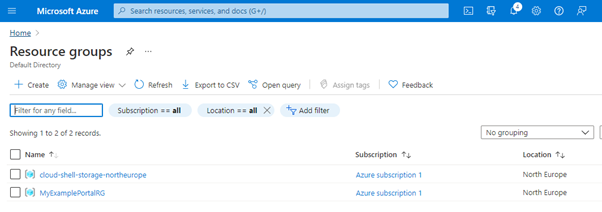

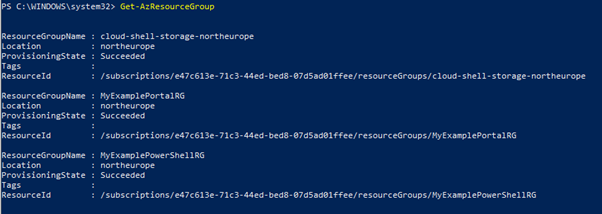

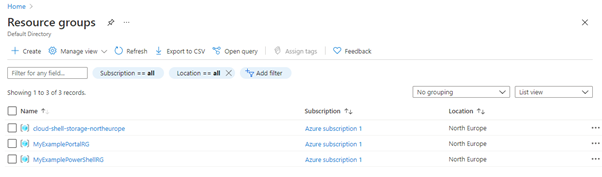

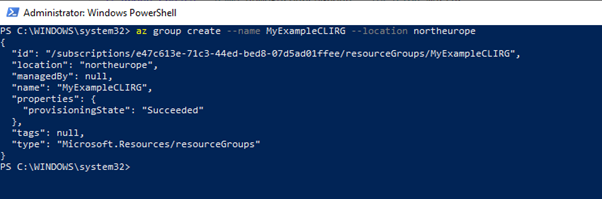

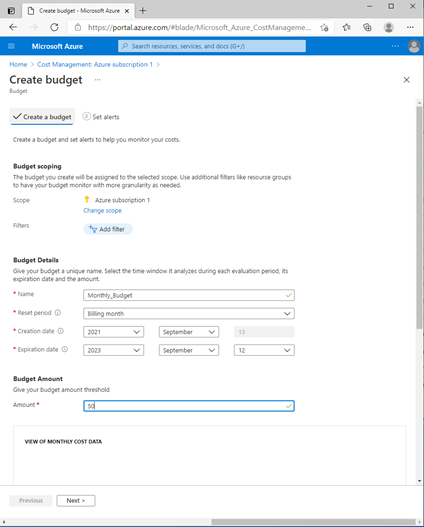

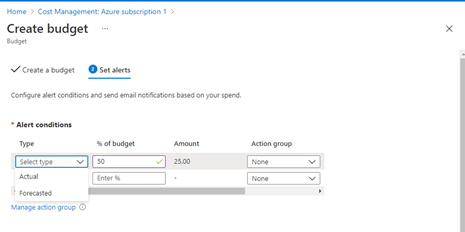

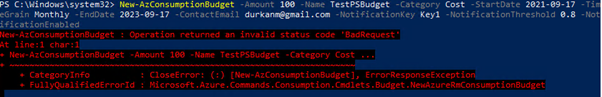

On Day 2, I created an Azure account on the Portal and set up Cost Management and Budget Alerts. Day 3 covered Resource Groups, which let us group resources that belong to the same project team, department or location.

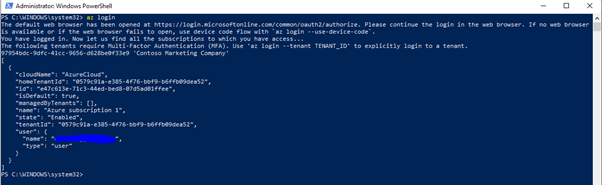

Azure Active Directory

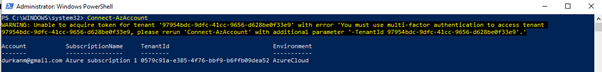

Every Azure environment is built on top of an Azure Active Directory (Azure AD) tenant. Azure AD is Microsoft’s cloud-based identity and access management service, and when you sign up for an Azure subscription, you automatically get an Azure Active Directory instance.

Now let’s stop here for a minute, because something sounds familiar… Active Directory! I know all about that! Domains, hierarchy, GPOs!

No. It’s not the same as the Active Directory that on-premises admins are used to managing. Active Directory has a hierarchical structure, where you can create OUs for locations or job roles, add users, groups or computers to those OUs, and manage those objects using permission assignments or Group Policy Objects.

Azure Active Directory still has users, groups, authentication and authorization, but access to Azure resources is controlled using Role Based Access Control (RBAC). There is a large number of predefined RBAC roles, and I’ll try to explain how they work later in this post.

A quick note first: even though Active Directory and Azure Active Directory are distinctly different from an architecture perspective, they can talk to each other. The best real-world example of this is a hybrid Office 365 deployment, where you use Azure AD Connect to sync on-premises users to Azure Active Directory for use with services such as Exchange Online, SharePoint and Teams.

Use Case

RBAC allows you to grant users the exact roles they need to do their jobs while striking the right balance between autonomy and corporate governance.

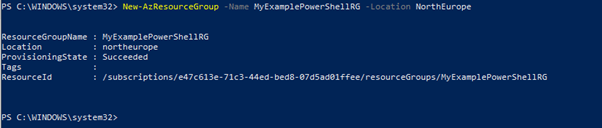

Let’s set up a use case. Like on Day 3, I want to run a virtual machine, and it needs to run in a specific region (e.g. East US). I would create a Resource Group in East US, then create the resources required for the virtual machine (Storage Account, Virtual Network, and the Virtual Machine itself) within that Resource Group. However, the machine is running an application, so it needs both a website at the front end and a SQL database at the back end to store the application data.

As you can see, we have many responsibilities and different technologies in play here. Here is what RBAC allows us to do in this scenario:

- Allow one user to manage virtual machines and another user to manage virtual networks in the entire subscription.

- Allow a database administrator group to manage SQL databases in the resource group only.

- Allow a user to manage all resources in a resource group, such as virtual machines, websites, and subnets.

- Allow an application to access all resources in a resource group.
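To make the four scenarios above concrete, here is a minimal Python sketch that writes each one down as a role assignment (who, which role, where). The subscription ID, resource group name and principal names are hypothetical placeholders. The role names are Azure built-in roles, and the scope strings follow the `/subscriptions/<id>/resourceGroups/<name>` format Azure uses. It only models the assignments as data; it does not call Azure.

```python
from dataclasses import dataclass

# Hypothetical identifiers used only for illustration.
SUBSCRIPTION = "/subscriptions/00000000-0000-0000-0000-000000000000"
RESOURCE_GROUP = f"{SUBSCRIPTION}/resourceGroups/rg-app-eastus"


@dataclass(frozen=True)
class RoleAssignment:
    principal: str  # who: a user, group or application (service principal)
    role: str       # what: the name of an Azure built-in role
    scope: str      # where: subscription, resource group or resource ID


assignments = [
    # One user manages VMs, another manages virtual networks, across the subscription.
    RoleAssignment("alice@contoso.com", "Virtual Machine Contributor", SUBSCRIPTION),
    RoleAssignment("bob@contoso.com", "Network Contributor", SUBSCRIPTION),
    # The DBA group manages SQL databases, but only inside the resource group.
    RoleAssignment("group:dba-team", "SQL DB Contributor", RESOURCE_GROUP),
    # One user manages everything in the resource group.
    RoleAssignment("carol@contoso.com", "Contributor", RESOURCE_GROUP),
    # The application's service principal gets access to the resource group.
    # Contributor is an assumption here; Reader would do for read-only access.
    RoleAssignment("app:web-frontend", "Contributor", RESOURCE_GROUP),
]

for a in assignments:
    print(f"{a.principal:<20} {a.role:<28} {a.scope}")
```

Notice that the only difference between "the entire subscription" and "the resource group only" is the scope string; the principal and role stay the same kind of thing.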

How RBAC works

RBAC works using role assignments, which control how permissions are enforced. A role assignment is made up of three elements:

1. Security Principal (Who): a user, group or application that you want to grant access to.

2. Role Definition (What): a collection of permissions. Roles can be high level (e.g. Owner) or specific (e.g. Virtual Machine Contributor).

Azure includes many built-in roles that you can use. The following are four fundamental built-in roles:

- Owner — Has full access to all resources, including the right to delegate access to others.

- Contributor — Can create and manage all types of Azure resources, but can’t grant access to others.

- Reader — Can view existing Azure resources.

- User Access Administrator — Lets you manage user access to Azure resources.

If the built-in roles don’t meet the specific needs of your organization, you can create your own custom roles. A full list of built-in roles is available in the Microsoft Azure documentation.

3. Scope (Where): the boundary that the access applies to. You can apply the scope at multiple levels:

- Management Group

- Subscription

- Resource Group

- Resource
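Going back to the second element: a role definition is essentially a list of allowed operations (`Actions`) minus any excluded ones (`NotActions`), with `*` as a wildcard. The Python sketch below models that idea for the four fundamental roles. The definitions are deliberately simplified (the real ones, such as Contributor's, contain more entries), so treat them as illustrations rather than copies of Azure's definitions. The operation strings follow Azure's `Provider/resourceType/action` naming.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class RoleDefinition:
    name: str
    actions: tuple[str, ...]          # operations the role grants (wildcards allowed)
    not_actions: tuple[str, ...] = () # operations carved back out of `actions`

    def allows(self, operation: str) -> bool:
        """True if some Action pattern matches and no NotAction pattern does."""
        op = operation.lower()  # operation names are treated case-insensitively
        granted = any(fnmatchcase(op, p.lower()) for p in self.actions)
        excluded = any(fnmatchcase(op, p.lower()) for p in self.not_actions)
        return granted and not excluded


# Simplified versions of the four fundamental built-in roles.
OWNER = RoleDefinition("Owner", ("*",))
CONTRIBUTOR = RoleDefinition(
    "Contributor",
    ("*",),
    # Everything except managing access (role assignments etc.).
    ("Microsoft.Authorization/*/Write", "Microsoft.Authorization/*/Delete"),
)
READER = RoleDefinition("Reader", ("*/read",))
USER_ACCESS_ADMIN = RoleDefinition(
    "User Access Administrator",
    ("*/read", "Microsoft.Authorization/*", "Microsoft.Support/*"),
)

for role in (OWNER, CONTRIBUTOR, READER, USER_ACCESS_ADMIN):
    print(role.name, role.allows("Microsoft.Authorization/roleAssignments/write"))
```

This shows why Contributor "can’t grant access to others": it matches every operation through `*`, but the `Microsoft.Authorization` write and delete operations are removed again by `NotActions`.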

When you grant access at a parent scope, those permissions are inherited by all child scopes.
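Inheritance works because Azure scopes are hierarchical resource IDs, e.g. `/subscriptions/<id>/resourceGroups/<name>/providers/...`. Here is a minimal Python sketch, assuming only subscription, resource group and resource scopes, that treats inheritance as a case-insensitive path-prefix check. Management group scopes use a different ID format and would need the hierarchy looked up, so they are left out, and the IDs are hypothetical.

```python
# Hypothetical scope IDs for illustration.
SUB = "/subscriptions/1111"
RG = f"{SUB}/resourceGroups/rg-app-eastus"
VM = f"{RG}/providers/Microsoft.Compute/virtualMachines/vm-web01"


def covers(assigned_scope: str, target_scope: str) -> bool:
    """Return True if an assignment at assigned_scope is inherited by target_scope.

    IDs are compared case-insensitively as path prefixes, so an assignment on
    a subscription flows down to its resource groups and resources.
    """
    parent = assigned_scope.rstrip("/").lower()
    child = target_scope.rstrip("/").lower()
    # Requiring the "/" stops rg-app-eastus from also matching rg-app-eastus2.
    return child == parent or child.startswith(parent + "/")


print(covers(SUB, VM))  # True: inherited from the subscription
print(covers(RG, VM))   # True: inherited from the resource group
print(covers(VM, RG))   # False: access never flows back up to a parent
print(covers(RG, f"{SUB}/resourceGroups/rg-app-eastus2"))  # False: sibling group
```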

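RBAC is an additive, allow-based model: your effective permissions are the sum of all your role assignments. This means that if you are assigned “Reader” at the subscription level and “Virtual Machine Contributor” at the resource group level, you will have Virtual Machine Contributor rights in that resource group, plus read access across the rest of the subscription.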
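The Reader plus Virtual Machine Contributor example above can be checked with a small Python sketch: an operation is allowed if any assignment whose scope covers the target grants it. The scopes are hypothetical and the role permissions are simplified patterns, not Azure's full definitions.

```python
from fnmatch import fnmatchcase

# Hypothetical scopes for the example.
SUB = "/subscriptions/1111"
RG = f"{SUB}/resourceGroups/rg-app-eastus"
OTHER_RG = f"{SUB}/resourceGroups/rg-other"

# Simplified permission patterns; the real role definitions are longer.
ROLES = {
    "Reader": ["*/read"],
    "Virtual Machine Contributor": ["Microsoft.Compute/virtualMachines/*"],
}

# The (role, scope) pairs assigned to one user.
ASSIGNMENTS = [("Reader", SUB), ("Virtual Machine Contributor", RG)]


def in_scope(assigned: str, target: str) -> bool:
    """An assignment applies to its own scope and everything beneath it."""
    parent, child = assigned.lower(), target.lower()
    return child == parent or child.startswith(parent + "/")


def is_allowed(operation: str, target: str) -> bool:
    """Additive model: allowed if ANY covering assignment grants the operation."""
    return any(
        in_scope(scope, target)
        and any(fnmatchcase(operation.lower(), p.lower()) for p in ROLES[role])
        for role, scope in ASSIGNMENTS
    )


vm = f"{RG}/providers/Microsoft.Compute/virtualMachines/vm-web01"
other_vm = f"{OTHER_RG}/providers/Microsoft.Compute/virtualMachines/vm-db01"
print(is_allowed("Microsoft.Compute/virtualMachines/restart/action", vm))        # True
print(is_allowed("Microsoft.Compute/virtualMachines/restart/action", other_vm))  # False
print(is_allowed("Microsoft.Compute/virtualMachines/read", other_vm))            # True
```

The Reader assignment never takes anything away: it simply adds nothing inside the resource group that Virtual Machine Contributor doesn’t already cover, which is what "additive" means in practice.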

Conclusion

And that, my friends, is a very high-level overview of how Azure Active Directory and RBAC work. In the coming days, I’ll be using RBAC to control access to the items I deploy along the cloud journey. Yes, we’re close to deploying something! Maybe next time, so come back and find out!

Hope you enjoyed this post, until next time!!