It’s Day 86 of my 100 Days of Cloud journey, and in today’s post I’m going to give an introduction to Kubernetes.

We introduced Containers on Day 81 and gave an overview of how they work and how they differ in architecture when compared to traditional Bare Metal Physical or Virtual Infrastructure. A container is a lightweight environment that can be used to build and securely run applications and their dependencies. We need container management tools such as Docker to run commands and manage our containers.

Containers Recap

We saw how easy it is to deploy and manage containers during the series where I built a monitoring system using a Telegraf agent to pull data into an InfluxDB Docker container, and then used a Grafana container to display metrics from the time series database.

So let’s get back to that for a minute and understand a few points about that system:

- The Docker Host was an Ubuntu Server VM, so we can assume that it ran in a highly available environment – either an on-premises Virtual Cluster such as Hyper-V or VMware or on a Public Cloud VM such as an Azure Virtual Machine or an Amazon EC2 Instance.

- It took data in from a single datasource, which was brought into a single time series database, which then was presented on a single dashboard.

- So altogether we had 1 host VM and 2 containers. Because the containers and datasource were static, there was no need for scaling or complex management tasks. The containers were run with persistent storage configured, the underlying apps were configured and after that the system just happily ran.

So in effect, that was a static system that required very little or no management after creation. But we also had no means of scaling it if required.

What if we wanted to build something more complex, like an application with multiple layers, where we need to scale out by deploying more container instances when demand increases and scale back in when demand drops?

This is where container orchestration technologies are useful because they can handle this for you. A container orchestrator is a system that automatically deploys and manages containerized apps. It can dynamically respond to changes in the environment to increase or decrease the deployed instances of the managed app. Or, it can ensure all deployed container instances get updated if a new version of a service is released.
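To make that a bit more concrete, here's a rough sketch of the idea at the heart of an orchestrator: compare how many instances you asked for with how many are actually running, and work out what to do about the difference. The `AppState` class and `reconcile` function are names I've made up for illustration. This is not how Kubernetes itself is implemented, just the general pattern.

```python
from dataclasses import dataclass


@dataclass
class AppState:
    """Hypothetical snapshot of one managed app (illustrative only)."""
    desired_replicas: int   # how many instances we asked for
    running_replicas: int   # how many instances are actually running


def reconcile(state: AppState) -> str:
    """Return the action that moves the running count toward the desired count."""
    diff = state.desired_replicas - state.running_replicas
    if diff > 0:
        return f"start {diff} instance(s)"
    if diff < 0:
        return f"stop {-diff} instance(s)"
    return "nothing to do"


if __name__ == "__main__":
    # Demand went up: we want 5 instances but only 3 are running.
    print(reconcile(AppState(desired_replicas=5, running_replicas=3)))
    # Demand dropped: we want 2 instances but 4 are still running.
    print(reconcile(AppState(desired_replicas=2, running_replicas=4)))
```

An orchestrator runs a check like this over and over, so a crashed instance or a changed target gets picked up on the next pass.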

And this is where Kubernetes comes in!

Kubernetes Overview

Kubernetes is an open-source platform created by Google for managing and orchestrating containerized workloads. Kubernetes is also known as “K8s”, and can run any Linux container across private, public, and hybrid cloud environments. Kubernetes allows you to build application services that span multiple containers, schedule those containers across a cluster, scale those containers, and manage the health of those containers over time.

The benefits of using Kubernetes are:

- Horizontal Scaling

- Auto Scaling

- Health check & Self-healing

- Load Balancer

- Service Discovery

- Automated rollbacks & rollouts

It’s important to note though that all of these tasks require configuration and a good understanding of the underlying technologies. You need to understand concepts such as virtual networks, load balancers, and reverse proxies to configure Kubernetes networking.
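As an example of what sits behind the "Auto Scaling" item in the list above, the Kubernetes documentation describes the core calculation of the Horizontal Pod Autoscaler as: desired replicas = ceil(current replicas × current metric ÷ target metric). The sketch below applies that formula and clamps the result between a minimum and a maximum. The function name and the default limits are my own assumptions, and the real autoscaler also applies a tolerance and other safeguards that are left out here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Apply the basic autoscaling ratio, then clamp to the allowed range.

    min_replicas and max_replicas are illustrative defaults, not Kubernetes defaults.
    """
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 3 replicas averaging 90% CPU against a 60% target: scale out.
    print(desired_replicas(3, current_metric=90, target_metric=60))
    # 4 replicas averaging 30% CPU against a 60% target: scale in.
    print(desired_replicas(4, current_metric=30, target_metric=60))
```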

Kubernetes Components

A Kubernetes cluster consists of:

- A set of worker machines, called nodes, that run containerized applications. Every cluster has at least one worker node.

- A master node or control plane, which manages the worker nodes and the Pods in the cluster.

Let’s take a look at the components that make up each of these.

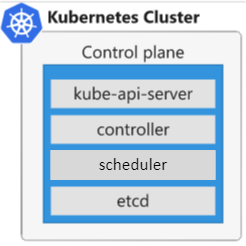

Control Plane or Master Node

The following services make up the control plane for a Kubernetes cluster:

- API server – the front end to the control plane in your Kubernetes cluster. All the communication between the components in Kubernetes is done through this API.

- Backing store – used by Kubernetes to save the complete configuration of a Kubernetes cluster. A key-value store called etcd stores the current state and the desired state of all objects within your cluster.

- Scheduler – responsible for the assignment of workloads across all nodes. The scheduler monitors the cluster for newly created containers, and assigns them to nodes.

- Controller manager – tracks the state of objects in the cluster. There are controllers to monitor nodes, containers, and endpoints.

- Cloud controller manager – integrates with the underlying cloud technologies in your cluster when the cluster is running in a cloud environment. These services can be load balancers, queues, and storage.
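To give a feel for what the scheduler described above is doing, here's a minimal sketch of picking a node for a new workload: first filter out nodes that don't have enough free resources, then pick the best of what's left. The `Node` class, the `pick_node` function and the "most free CPU wins" rule are all assumptions of mine for illustration. The real scheduler weighs many more factors.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """Hypothetical view of a worker node's spare capacity."""
    name: str
    cpu_free: float    # free CPU cores
    memory_free: int   # free memory in MiB


def pick_node(nodes: list[Node], cpu_request: float,
              memory_request: int) -> Optional[str]:
    """Return the name of a node that can fit the workload, or None."""
    # Step 1: filter out nodes that cannot fit the request at all.
    feasible = [n for n in nodes
                if n.cpu_free >= cpu_request and n.memory_free >= memory_request]
    if not feasible:
        return None  # nothing fits, so the workload stays unscheduled
    # Step 2: score the remaining nodes; here, most free CPU (then memory) wins.
    best = max(feasible, key=lambda n: (n.cpu_free, n.memory_free))
    return best.name


if __name__ == "__main__":
    cluster = [
        Node("node-a", cpu_free=0.5, memory_free=512),
        Node("node-b", cpu_free=2.0, memory_free=4096),
        Node("node-c", cpu_free=1.0, memory_free=2048),
    ]
    print(pick_node(cluster, cpu_request=1.0, memory_request=1024))
```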

Worker Machines or Nodes

The following services run on the Kubernetes node:

- Kubelet – The kubelet is the agent that runs on each node in the cluster, and monitors work requests from the API server. It monitors the node and makes sure that the containers scheduled on it run as expected.

- Kube-proxy – The kube-proxy component is responsible for local cluster networking, and runs on each node. It maintains the network rules on the node so that traffic sent to a Kubernetes service reaches the right containers.

- Container runtime – the underlying software that runs containers on a Kubernetes cluster. The runtime is responsible for fetching container images, and for starting and stopping the containers created from them.
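To picture the kind of job the node's networking component does, here's a toy sketch that maps a service name to the IP addresses of the containers behind it and hands out a backend for each request in turn. The `ServiceRouter` class and the round-robin choice are assumptions for illustration only. As far as I understand it, kube-proxy really works by programming rules into the node's networking stack (iptables or IPVS) rather than running code like this.

```python
import itertools


class ServiceRouter:
    """Toy lookup table from a service name to its backend IP addresses."""

    def __init__(self) -> None:
        self._backends = {}

    def set_endpoints(self, service: str, backend_ips: list) -> None:
        """Register (or replace) the backends for a service."""
        if not backend_ips:
            raise ValueError("a service needs at least one backend")
        # cycle() lets us hand out the backends in round-robin order forever.
        self._backends[service] = itertools.cycle(list(backend_ips))

    def route(self, service: str) -> str:
        """Return the backend IP that should receive the next request."""
        return next(self._backends[service])


if __name__ == "__main__":
    router = ServiceRouter()
    router.set_endpoints("web", ["10.0.0.4", "10.0.0.5"])
    for _ in range(3):
        print(router.route("web"))
```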

Pods

Unlike in a Docker environment, you can’t run containers directly on Kubernetes. You package the container into a Kubernetes object called a pod, which is effectively a wrapper around one or more containers, with the management overhead passed back to the Kubernetes cluster.

A pod can contain multiple containers that make up part or all of your application; however, in general a pod will never contain multiple instances of the same application. So for example, if running a website that requires a database back-end, both of those containers could be packaged into a single pod, although in practice they are usually placed in separate pods so that each can be scaled independently.

A pod also includes information about the shared storage and network configuration, and YAML-coded templates which define how to run the containers in the pod.
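Pod definitions are normally written in YAML, but the same structure can be expressed as JSON, which kubectl also accepts. To keep to one language, here's a sketch that builds a two-container pod definition as a Python dictionary and prints it as JSON. The pod name, container names, image tags and mount paths are examples I've picked, not something from an earlier post. The two containers share an `emptyDir` volume, which shows the "shared storage" part.

```python
import json

# Example pod definition: two containers sharing one scratch volume.
pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-pod", "labels": {"app": "web"}},
    "spec": {
        # Storage that every container in the pod can mount.
        "volumes": [{"name": "shared-data", "emptyDir": {}}],
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.25",
                "ports": [{"containerPort": 80}],
                "volumeMounts": [
                    {"name": "shared-data", "mountPath": "/usr/share/nginx/html"}
                ],
            },
            {
                "name": "content-writer",
                "image": "busybox:1.36",
                # Writes a page into the shared volume, then idles.
                "command": ["sh", "-c",
                            "echo hello > /data/index.html && sleep 3600"],
                "volumeMounts": [{"name": "shared-data", "mountPath": "/data"}],
            },
        ],
    },
}


def render(manifest: dict) -> str:
    """Serialise the manifest as indented JSON."""
    return json.dumps(manifest, indent=2)


if __name__ == "__main__":
    print(render(pod_manifest))
```

Saving the printed output to a file would give you something you could hand to `kubectl apply -f`.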

Managing your Kubernetes environment

You have a number of options for managing your Kubernetes environment:

- kubectl – You can use kubectl to deploy applications, inspect and manage cluster resources, and view logs. kubectl can be installed on Linux, macOS and Windows platforms.

- kind – this is used for running Kubernetes on your local device.

- minikube – similar to kind in that it allows you to run Kubernetes locally.

- kubeadm – this is used to create and manage Kubernetes clusters in a user-friendly way.

kubectl is by far the most used in enterprise Kubernetes environments, and you can find more details in the official Kubernetes documentation.
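To give a flavour of the kubectl tasks mentioned above (deploying, inspecting and viewing logs), here's a small sketch that builds a few common kubectl command lines and prints them. The `kubectl_args` helper and the file and pod names (`pod.json`, `web-pod`) are hypothetical. Nothing is executed, so it runs fine on a machine without kubectl installed.

```python
import shlex


def kubectl_args(*args: str) -> list:
    """Build the argument list for a kubectl command without running it."""
    return ["kubectl", *args]


# A few everyday commands; file and pod names are placeholders.
EXAMPLE_COMMANDS = [
    kubectl_args("apply", "-f", "pod.json"),      # deploy from a definition file
    kubectl_args("get", "pods"),                  # list pods
    kubectl_args("describe", "pod", "web-pod"),   # inspect one pod in detail
    kubectl_args("logs", "web-pod"),              # view a pod's logs
]

if __name__ == "__main__":
    for command in EXAMPLE_COMMANDS:
        # shlex.join quotes arguments so the line can be pasted into a shell.
        print(shlex.join(command))
```

If you did want to run them from Python, each list could be passed to `subprocess.run`, assuming kubectl is installed and pointed at a cluster.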

Important Considerations

While Kubernetes provides an orchestration platform that lets you run your clusters and scale as required, there are certain things you need to be aware of, including what it doesn’t do for you:

- Deployment, scaling, load balancing, logging, and monitoring are all optional. You need to configure these and fit these into your specific solution requirements.

- There is no limit to the types of apps that can run – if it can run in a container, it can run on Kubernetes.

- Kubernetes doesn’t provide middleware, data-processing frameworks, databases, caches, or cluster storage systems.

- A container runtime such as Docker is required for managing containers.

- You need to manage the underlying environment that Kubernetes runs on (memory, networking, storage etc), and also manage upgrades to the Kubernetes platform itself.

Azure Kubernetes Service

All of the above considerations, and indeed all of the sections we’ve covered in this post, require detailed knowledge of both Kubernetes and its underlying dependencies. Some of this overhead is removed by cloud services such as Azure Kubernetes Service (AKS), which provides a hosted Kubernetes environment.

As a hosted Kubernetes service, Azure handles critical tasks, like health monitoring and maintenance. Since Kubernetes masters are managed by Azure, you only manage and maintain the agent nodes.

You can create an AKS cluster using:

- The Azure CLI

- The Azure portal

- Azure PowerShell

- Template-driven deployment options, like Azure Resource Manager templates, Bicep and Terraform.

When you deploy an AKS cluster, the Kubernetes master and all nodes are deployed and configured for you. Advanced networking, Azure Active Directory (Azure AD) integration, monitoring, and other features can be configured during the deployment process.

Conclusion

And that’s a description of Kubernetes, how it works, why it’s useful and the components that are contained within it. In the next post, we’re going to put all that theory into practice and set up a local Kubernetes cluster using minikube, and also look at deploying a cluster onto Azure Kubernetes Service.

Hope you enjoyed this post, until next time!