It’s Day 34 of 100 Days of Cloud, and in today’s post I’m starting my learning journey on Infrastructure as Code (IaC).

Infrastructure as Code is one of the phrases we’ve heard a lot in the last few years as the Public Cloud has exploded. In one of my previous posts on AWS, I gave a brief description of AWS CloudFormation, the built-in AWS tool, which I described as:

- Infrastructure as Code tool, which uses JSON or YAML based documents called CloudFormation templates. CloudFormation supports many different AWS resources from storage, databases, analytics, machine learning, and more

I’ll go back to cover AWS CloudFormation at a later date when I get more in-depth into AWS. For today and the next few days, I’m heading back across into Azure to see how we can use HashiCorp Terraform to deploy and manage infrastructure in Azure.

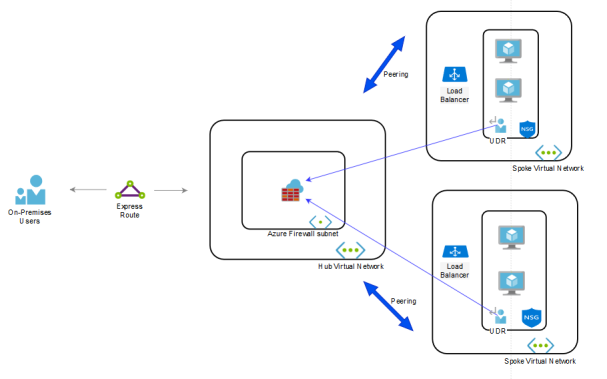

In previous posts on Azure, we looked at the 3 different ways to deploy Infrastructure in Azure:

Over the coming days, we’ll look at deploying, changing and destroying infrastructure in Azure with Terraform as our Infrastructure as Code tool.

Before we move on….

Now before we go any further and get into the weeds of Terraform and how it works, I want to allay some fears.

When people see the word “Code” in a service description, the automatic assumption is that you need to be a developer to understand and be competent in this method of deploying infrastructure. As anyone who knows me or has read my bio will know, I’m not a developer and don’t have a development background.

And I don’t need to be in order to use tools like Terraform and CloudFormation. There are loads of useful articles and training courses out there which walk you through using and understanding these tools. The best place to start is the official HashiCorp Learn site, which provides learning paths for all the major Cloud providers (AWS/Azure/GCP) and also for Docker, Oracle and Terraform Cloud. If you search for HashiCorp Ambassadors such as Michael Levan and Luke Orellana, they have video content on YouTube, CloudAcademy and Cloudskills.io which walks you through the basics of Terraform.

Fundamentals of Terraform

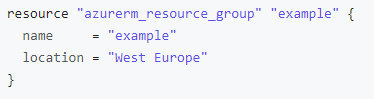

Terraform configurations are written in HCL, which stands for HashiCorp Configuration Language (a JSON-based variant of the syntax is also supported). It’s very similar to JSON, but has additional capabilities built in, such as comments. While JSON and YAML are more suited to data structures, HCL uses a syntax that is specifically designed for building structured configuration.

One of the main things we need to understand before moving forward with Terraform is what the above means.

HCL is a declarative language. This means that we define what needs to exist and the results that we expect to see, instead of telling the program how to do it step by step (which is imperative programming). So if we look at the example HCL config of an Azure Resource Group below, we see that we need to provide specific values:
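Here’s a minimal sketch of what such a Resource Group definition might look like. The resource label (`example`), the name `rg-100days-demo`, the location and the provider version constraint are all placeholder values I’ve picked for illustration, so swap in your own:

```hcl
# Tell Terraform which provider plugin this configuration needs.
# The version constraint is an assumption for this sketch.
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 3.0"
    }
  }
}

# The azurerm provider requires a features block, even an empty one.
provider "azurerm" {
  features {}
}

# Declare the Resource Group we want to exist.
# "example" is only a local label used inside Terraform;
# name and location are the values Azure actually sees.
resource "azurerm_resource_group" "example" {
  name     = "rg-100days-demo" # hypothetical name
  location = "northeurope"     # hypothetical region
}
```

Notice that there is no “create” command anywhere in the file. We only describe the Resource Group we want, and Terraform works out the steps needed to get there.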

When Terraform is used to deploy infrastructure, it creates a “state” file that records what has been deployed. So if you deploy with Terraform, you need to manage with Terraform also. Making changes to the infrastructure directly (for example, in the Azure Portal) means the real resources drift away from what the state file and your configuration describe, and the next Terraform run may try to revert or replace those changes, which could lead to losing your infrastructure.

For Azure users, Terraform is already built into the Azure Cloud Shell. To get Terraform working on your own machine, we need to follow these steps:

- Go to Terraform.io and download the CLI.

- Extract the file to a folder, and then add that folder to your system PATH environment variable so the `terraform` command can be found.

- Open PowerShell and run `terraform version` to make sure it is installed.

- Install the HashiCorp Terraform extension in VS Code.

Conclusion

So that’s the basics of Terraform. In the next post, we’ll run through the 4 steps to install Terraform on our machine, show how we get connected to Azure from VS Code, and then start looking at Terraform Configuration Files and Providers. Until next time!