It’s Day 49 of my 100 Days of Cloud Journey, and today I’m looking at how we can manage Azure services from Windows Admin Center, on either an Azure-based or an on-premises installation.

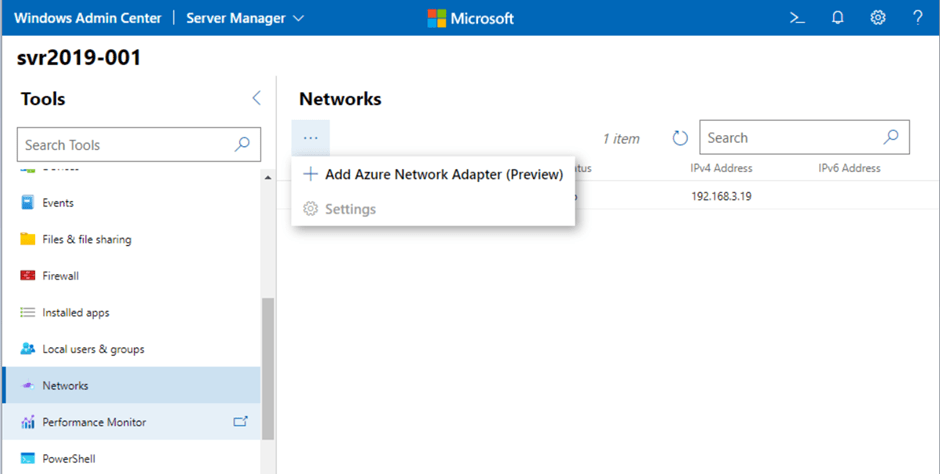

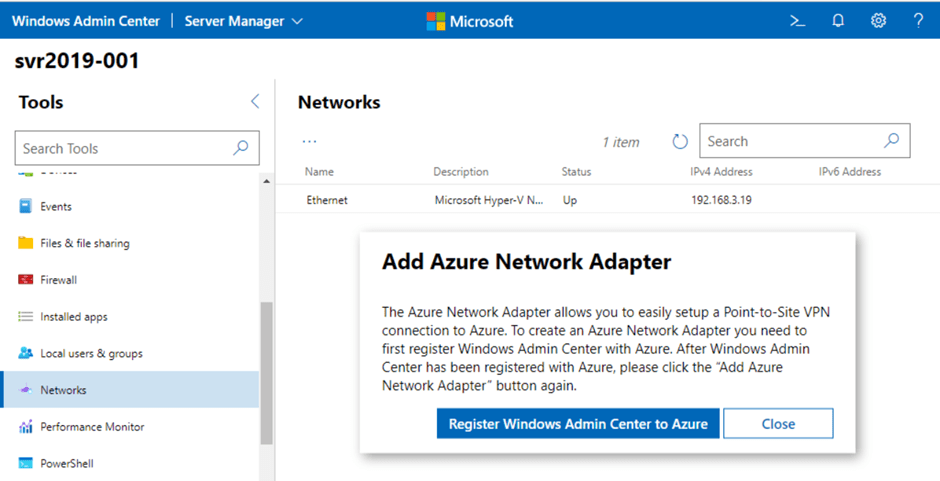

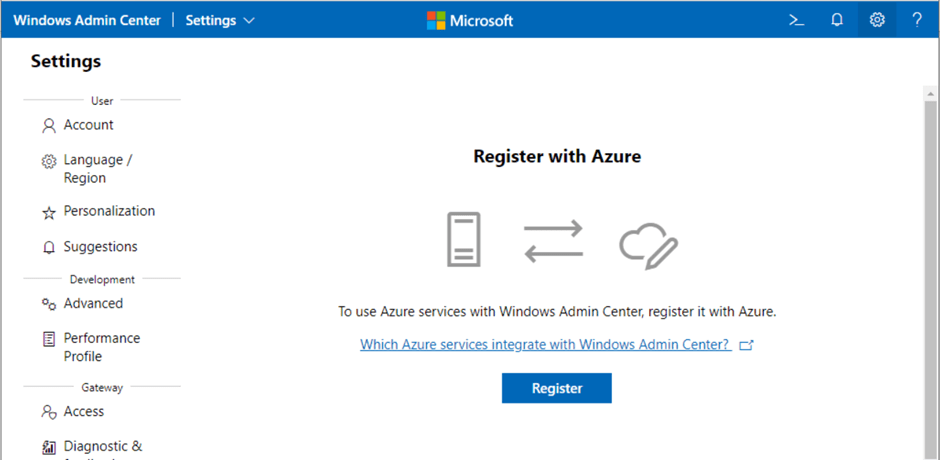

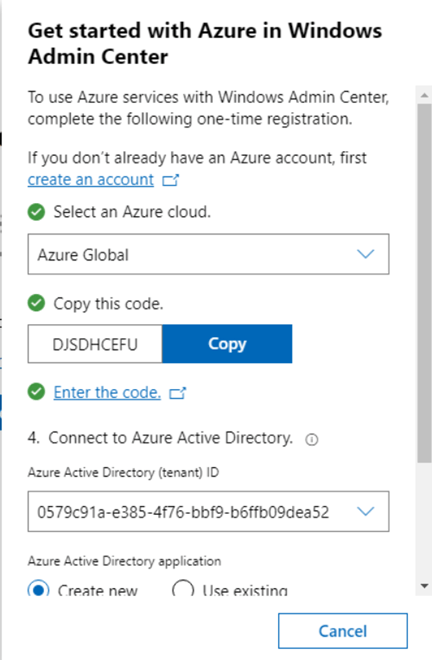

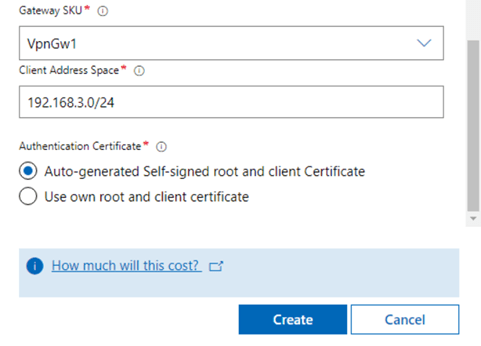

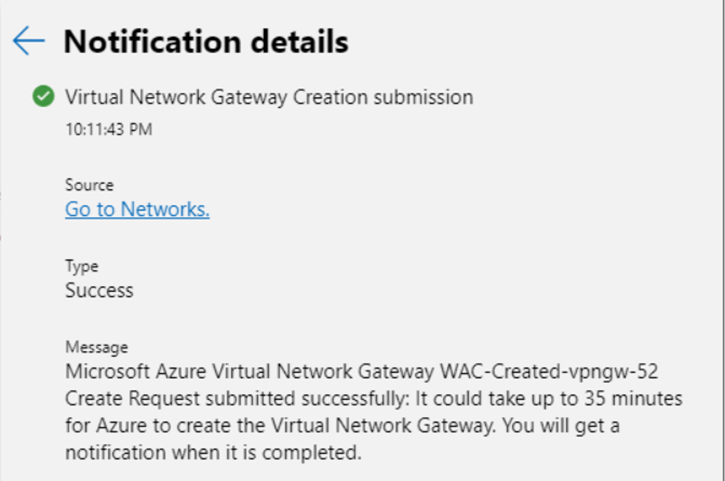

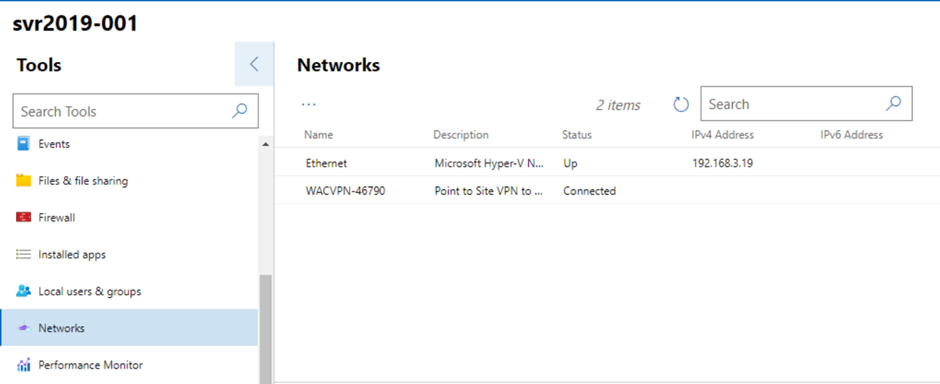

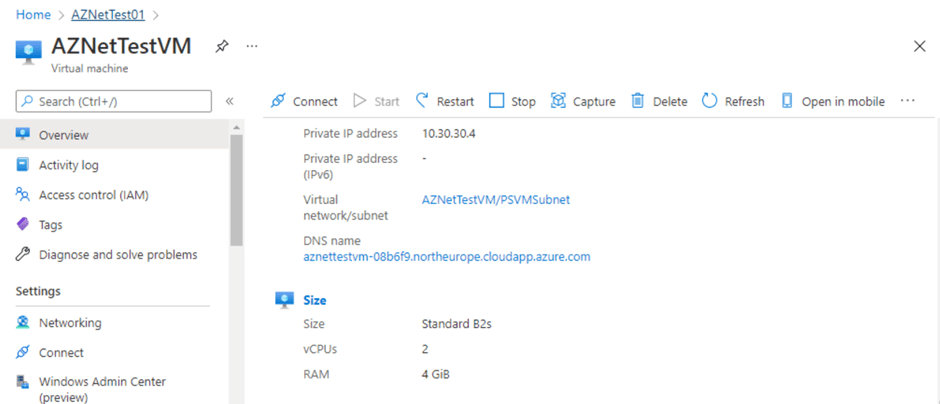

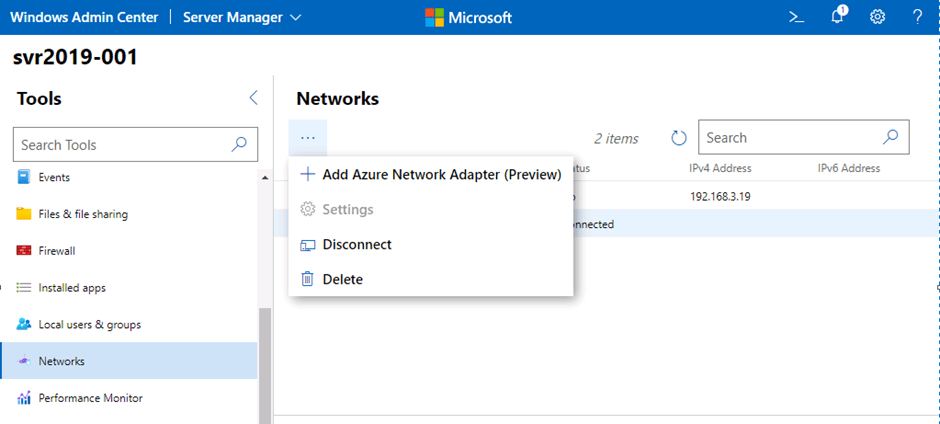

In the previous post, we saw how we can use Azure Network Adapter to connect an on-premises server directly to an Azure Virtual Network using a Point-to-Site VPN connection. However, when we looked at our server in the Windows Admin Center console, we saw a number of Azure options on the side menu.

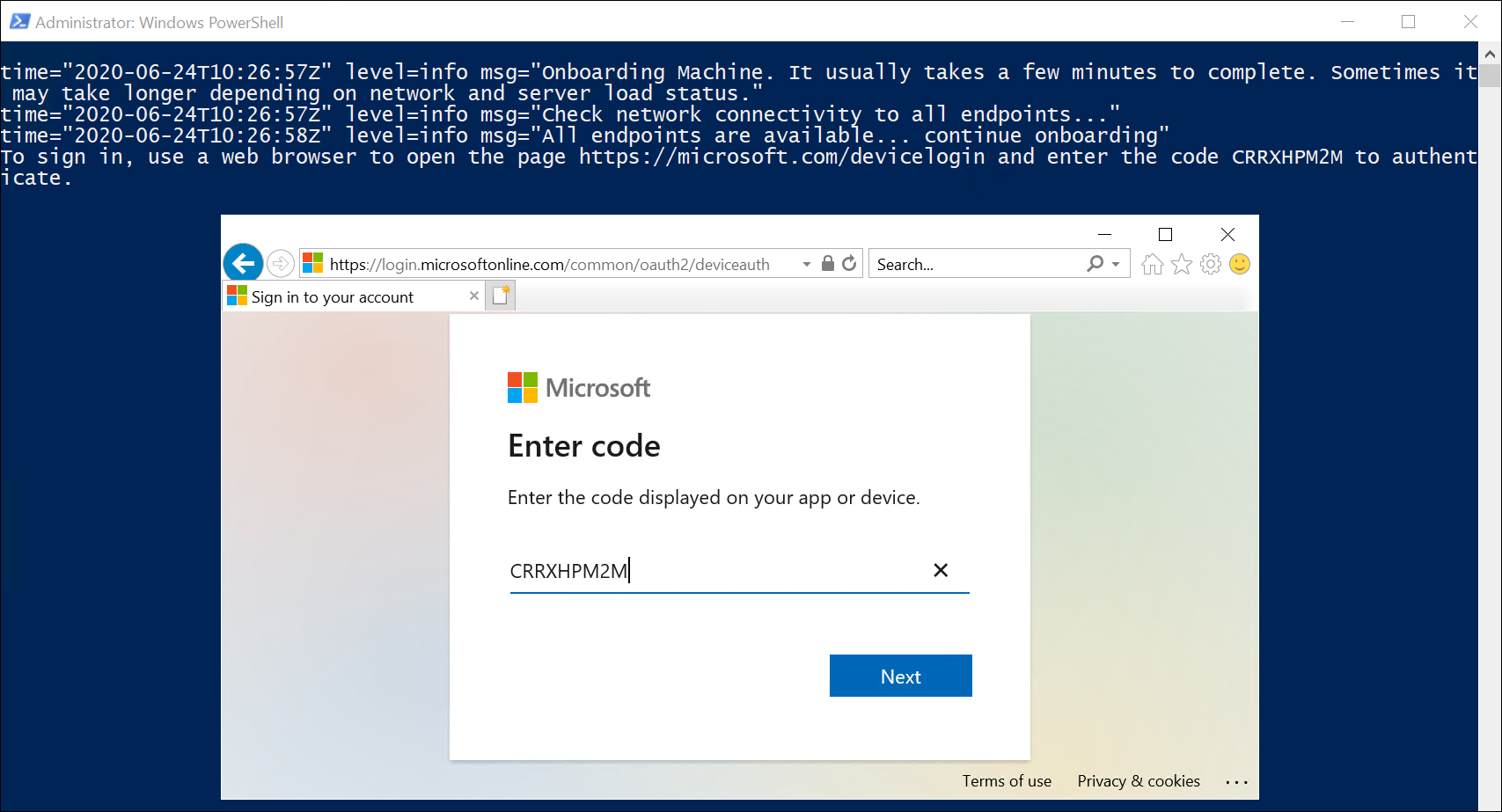

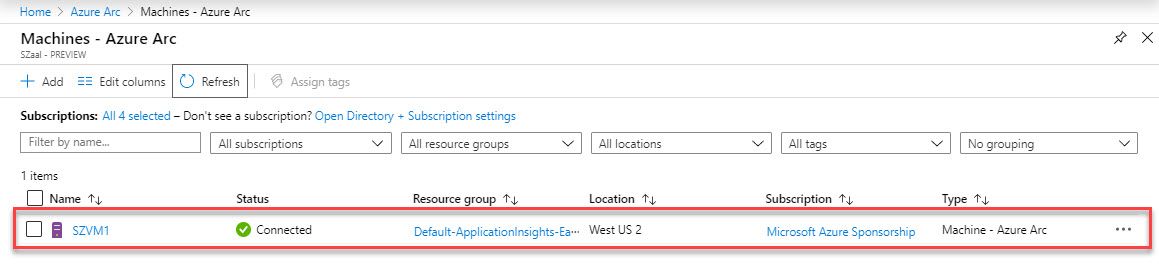

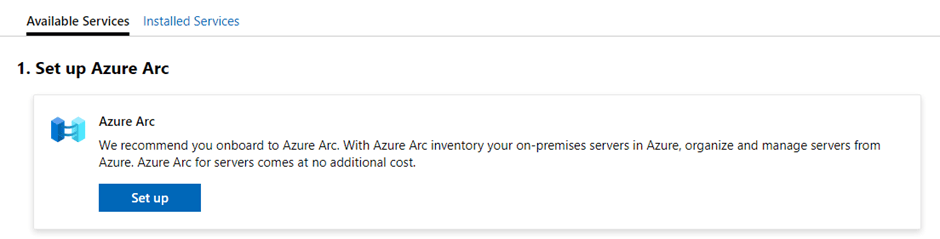

Let’s take a look at each of these menu options and what we have available. We’ll start with “Azure hybrid center”. When we click into this, the first option we see is a prompt to onboard the server to Azure Arc (we discussed the benefits of Azure Arc on Day 44):

Once we have onboarded to Azure Arc, we scroll down and see a number of other services available to us:

The services available here are:

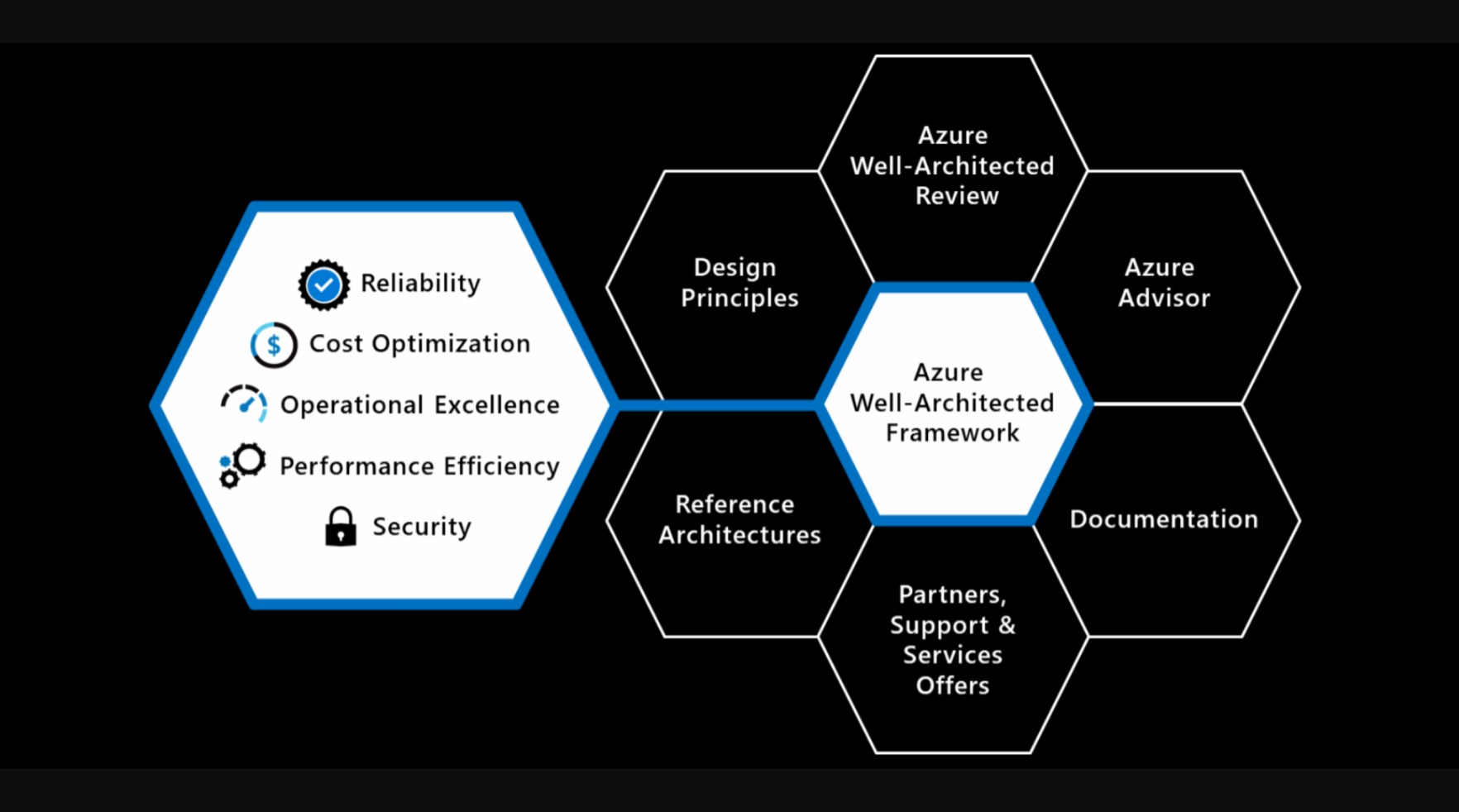

- Azure Site Recovery, which we covered in Day 19.

- Azure Update Management, which allows us to manage Windows Updates on Azure Arc registered servers.

- Azure File Sync, which allows us to host data in Azure File Shares and then sync that data to on-premises servers (blog post coming up on that!).

- Azure Extended Network, which allows us to extend our on-premises networks into Azure so that migrated VMs can keep their original IP addresses.

- Azure Monitor, which gives us full monitoring of our applications, infrastructure and networks (need a blog post about that too!).

- Azure Backup, which we covered in Day 23.

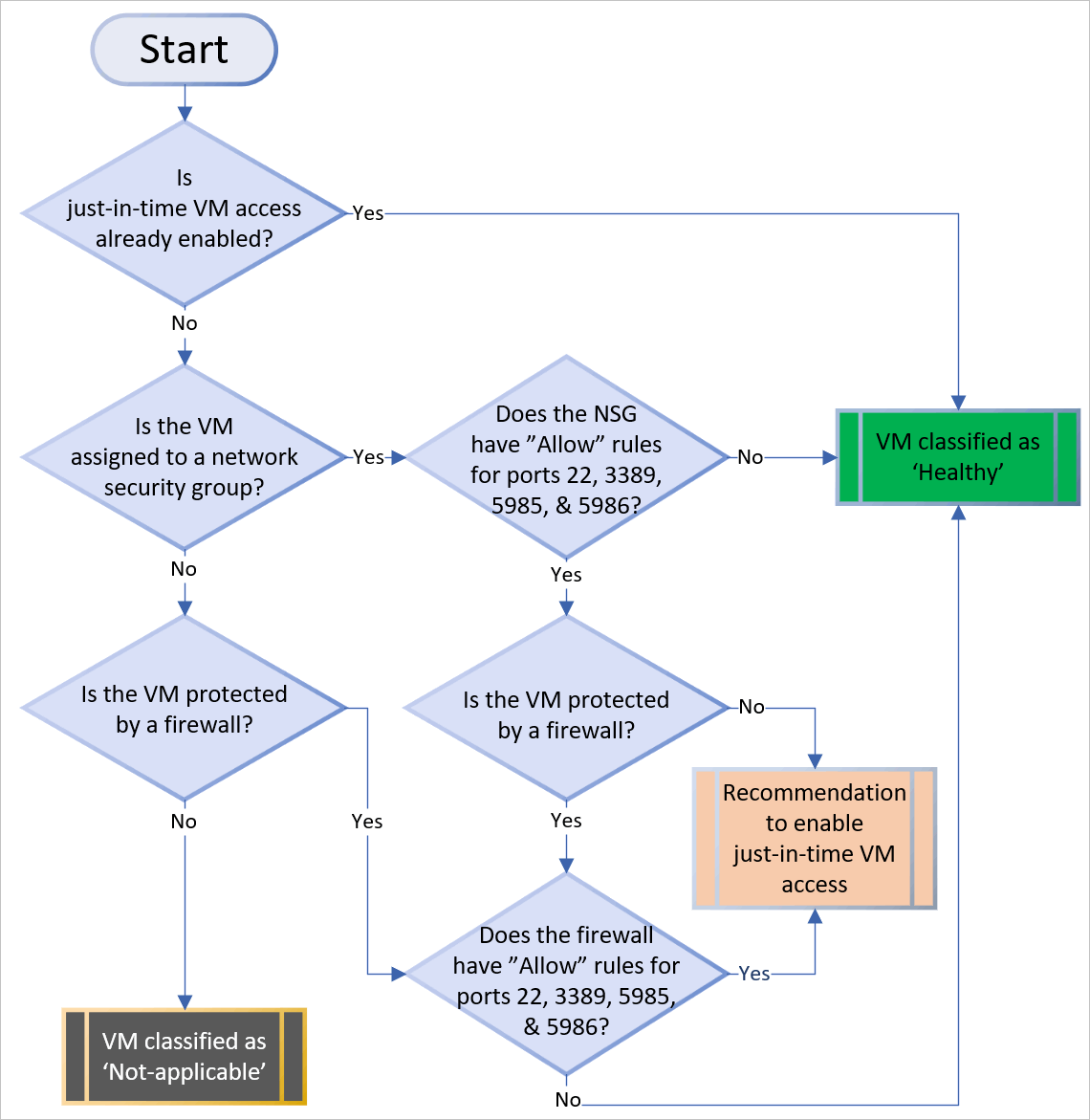

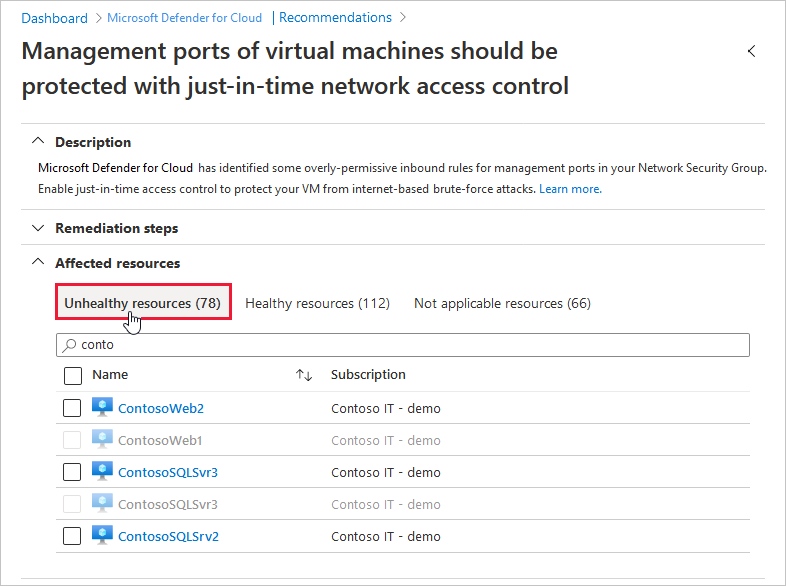

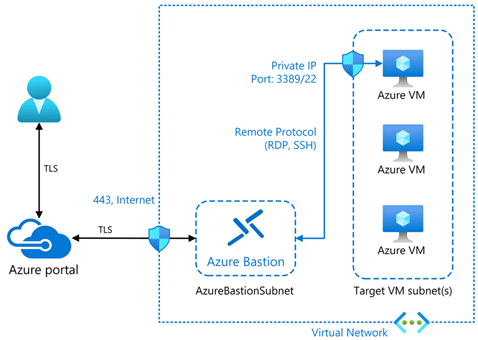

- Azure Security Center, which monitors security across hybrid workloads, applies policies and compliance baselines, blocks malicious activity, detects attacks and simplifies detection and remediation (another blog post needed on that!)

Wow, I’ve given myself lots more work to do out of that!

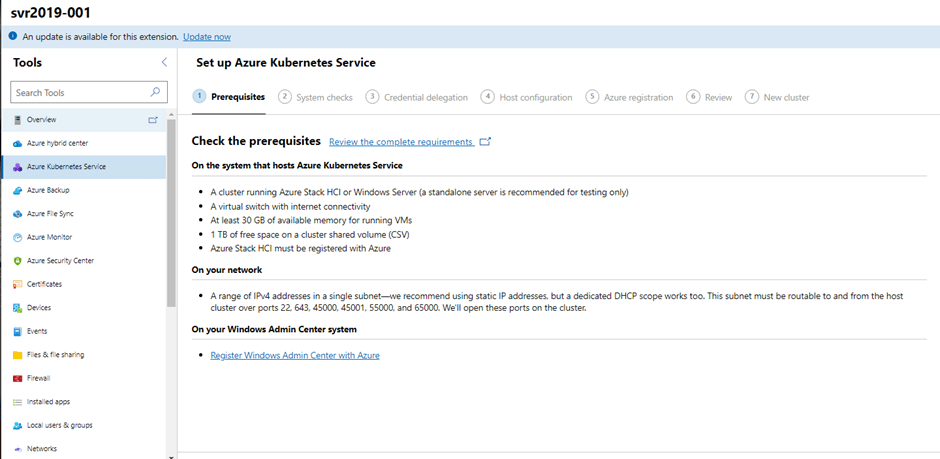

A lot of the above options are also part of the main menu. The one that’s on the main menu but not mentioned above is Azure Kubernetes Service (or AKS for short). Azure Kubernetes Service is a managed container orchestration service based on the open-source Kubernetes system, which is available on the Microsoft Azure public cloud. … AKS is designed for organizations that want to build scalable applications with Docker and Kubernetes while using the Azure architecture.

When we click on the menu option, we can see the option to deploy an AKS cluster:

I’m not going to delve into AKS in too much detail here (yes, you’ve guessed it, it’s another blog post…).

We can see how Windows Admin Center can provide a single management pane for hybrid services. You can check out this excellent post from Thomas Maurer’s blog for video descriptions of how to use each service with Windows Admin Center.

Hope you enjoyed this post, lots more posts to come out of this one! Until next time!